V100-R

High-Performance Robotic Motion Training & VLA Collaboration Solution

Precise kinematics, motion-driven training, control, and performance optimization, trusted by innovators worldwide.

Why Choose MOXI’s V100-R for Humanoid Robot Training?

Scientifically Validated High-Precision Data

- Equipped with 15 nine-axis IMU sensors plus high-precision finger modules for full-body 360° motion tracking.

- Angular accuracy within ±2°, combined with a 100 Hz refresh rate, ensures continuous and reliable datasets for AI/ML training.

- Free of line-of-sight constraints: unlike traditional RGB-D or LiDAR systems, it is unaffected by lighting conditions, occlusion, or reflections from metallic surfaces.
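At the stated 100 Hz refresh rate, each sensor should deliver a frame every 10 ms, so gaps in the timestamps are a quick way to confirm that a recording really is continuous before it goes into a training set. The sketch below is a minimal illustration, assuming each frame carries a timestamp in seconds; the `find_gaps` helper and its 1.5-frame tolerance are hypothetical choices, not part of the V100-R SDK.

```python
from typing import List, Tuple

SAMPLE_RATE_HZ = 100.0              # refresh rate quoted for the V100-R
NUM_IMUS = 15                       # body-worn nine-axis IMUs
EXPECTED_DT = 1.0 / SAMPLE_RATE_HZ  # 10 ms between frames


def find_gaps(timestamps: List[float], tolerance: float = 1.5) -> List[Tuple[int, int]]:
    """Return (index, missing_frames) for every interval longer than tolerance * EXPECTED_DT.

    Hypothetical helper: assumes timestamps are in seconds and strictly increasing.
    """
    gaps = []
    for i in range(1, len(timestamps)):
        dt = timestamps[i] - timestamps[i - 1]
        if dt > tolerance * EXPECTED_DT:
            # Number of frames that should have arrived inside this interval.
            missing = round(dt / EXPECTED_DT) - 1
            gaps.append((i, missing))
    return gaps


if __name__ == "__main__":
    # One second of ideal data with frames 40, 41 and 42 dropped.
    ts = [k * EXPECTED_DT for k in range(100) if k not in (40, 41, 42)]
    print(find_gaps(ts))                    # [(40, 3)]
    # IMU frames per second across all 15 sensors.
    print(int(NUM_IMUS * SAMPLE_RATE_HZ))   # 1500
```

The same arithmetic shows the data volume involved: 15 sensors at 100 Hz produce 1,500 IMU frames per second before any finger-module data is added.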

Easy Integration into Your Development Workflow

- Dedicated ROS2 SDK: Supports ROS2 integration with the NVIDIA Isaac ROS platform, accelerating robotic training and development.

- NVIDIA Ecosystem Compatibility: Fully compatible with Omniverse and Isaac Sim, enabling digital twin modeling, virtual training scenario generation, and simulation testing.

- Edge AI Inference: Optimized for NVIDIA Jetson platforms with TensorRT, delivering real-time motion analysis and control. Supports fully local IMU-based motion capture processing in offline environments.

- Standard Data Outputs: Provides TF, JointState, and IMU data, with full support for URDF models and visualization tools such as RViz and TF Viewer.

- Modular, scalable architecture with a high-precision motion data library—ideal for AI training and biomimetic robotic control.
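IMU-based motion capture typically derives joint angles from the orientations of the two body segments a joint connects. As a minimal sketch, assuming each IMU reports a unit quaternion (w, x, y, z) in a shared world frame with sensor-to-segment calibration already applied, the relative rotation is q_rel = conj(q_parent) ⊗ q_child, and its rotation angle is 2·acos(|w_rel|). The output dictionary mirrors the `name` and `position` fields of a ROS `sensor_msgs/JointState` message. The joint and segment names are hypothetical, and this single-angle model ignores the signed, per-axis decomposition a real SDK would need for multi-DOF joints.

```python
import math
from typing import Dict, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def q_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a ⊗ b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def joint_angle(q_parent: Quat, q_child: Quat) -> float:
    """Rotation angle (rad, 0..pi) of the child segment relative to its parent."""
    w = q_mul(q_conj(q_parent), q_child)[0]
    # abs() handles the q / -q double cover; min() guards against rounding above 1.
    return 2.0 * math.acos(min(1.0, abs(w)))


def axis_angle_quat(axis: Tuple[float, float, float], angle: float) -> Quat:
    s = math.sin(angle / 2.0)
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


# Hypothetical joint -> (parent segment, child segment) mapping.
JOINTS = {"right_elbow": ("right_upper_arm", "right_forearm")}


def to_joint_state(segments: Dict[str, Quat]) -> Dict[str, list]:
    """Build a dict with JointState-style 'name' and 'position' fields."""
    names, positions = [], []
    for joint, (parent, child) in JOINTS.items():
        names.append(joint)
        positions.append(joint_angle(segments[parent], segments[child]))
    return {"name": names, "position": positions}


if __name__ == "__main__":
    upper = axis_angle_quat((0.0, 0.0, 1.0), math.radians(30))
    fore = axis_angle_quat((0.0, 0.0, 1.0), math.radians(120))
    state = to_joint_state({"right_upper_arm": upper, "right_forearm": fore})
    print(state["name"], round(math.degrees(state["position"][0]), 3))  # ['right_elbow'] 90.0
```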

Real-World Application Value

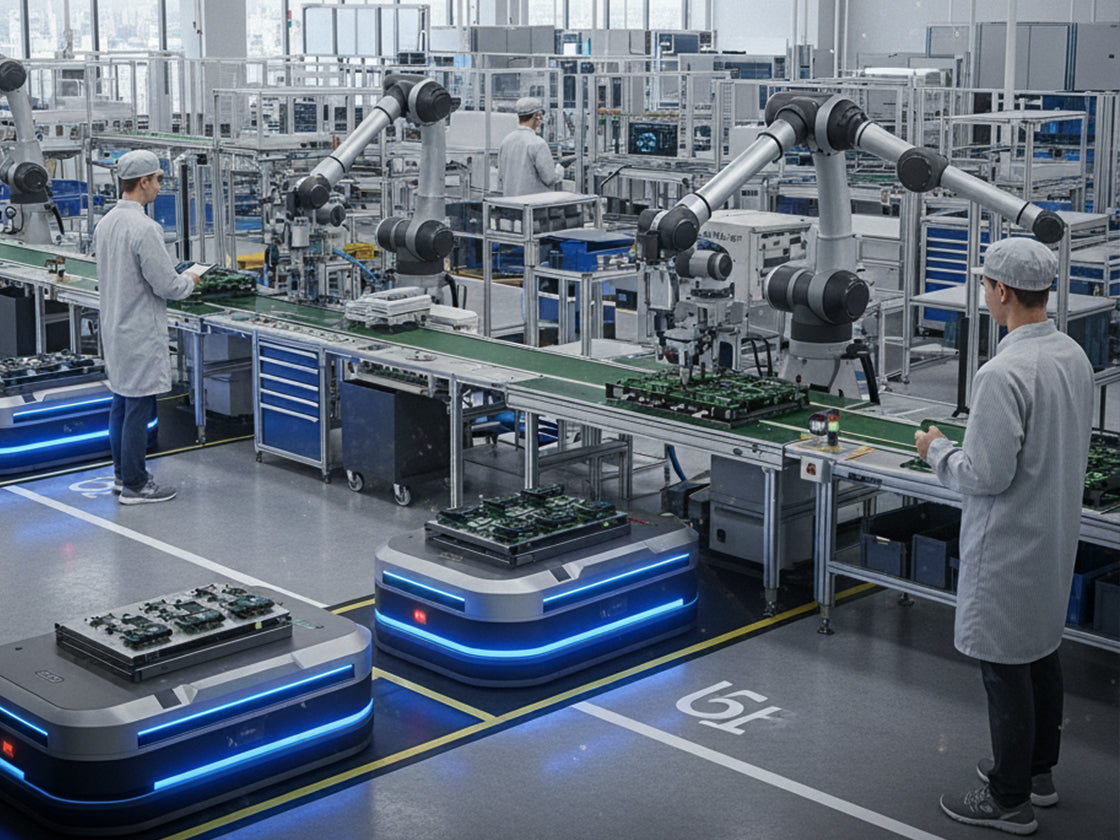

- Smart Manufacturing: Transform manual workflows into IMU datasets that serve as the foundation for robotic learning.

- Human-Robot Collaboration Training: Supports imitation learning, posture classification, and fall prediction.

- Hazardous Environment Operations: Data-driven robots can take over high-risk or specialized tasks, keeping people out of dangerous environments.

- AI & XR Research: Comprehensive support for digital twin modeling, simulation testing, and interactive applications.

- Motion-Driven Control: Convert MoCap posture data into control signals that drive biomimetic robots and robotic arms, supporting a seamless simulation → training → deployment pipeline with full digital-twin and control integration.

- AI-Enhanced Perception: Provides real-time human joint data for training and inference tasks such as fall prediction and posture classification, giving AI models more timely and accurate motion insights, even when running on resource-constrained edge devices.
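Turning MoCap posture data into control signals usually involves retargeting: mapping each human joint angle onto the matching robot joint, then enforcing the robot's joint limits and a maximum change per control step so commands stay safe. The sketch below assumes joint angles in radians arriving at the 100 Hz capture rate; the joint names, scale/offset values, limits, and velocity cap are hypothetical placeholders that would in practice come from the target robot's URDF.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class JointMap:
    robot_joint: str
    scale: float   # human-to-robot angle scaling (sign flips handle mirrored axes)
    offset: float  # zero-pose offset (rad)
    lower: float   # robot joint lower limit (rad)
    upper: float   # robot joint upper limit (rad)


# Hypothetical mapping; real limits would be read from the robot's URDF.
MAPPING: Dict[str, JointMap] = {
    "right_elbow": JointMap("r_elbow_pitch", 1.0, 0.0, 0.0, 2.5),
    "right_shoulder_pitch": JointMap("r_shoulder_pitch", -1.0, 0.1, -1.5, 1.5),
}

MAX_VEL = 3.0      # rad/s, assumed velocity cap
DT = 1.0 / 100.0   # one control step at 100 Hz


def retarget(human: Dict[str, float], previous: Dict[str, float]) -> Dict[str, float]:
    """Map human joint angles to clamped, rate-limited robot joint commands."""
    commands = {}
    max_step = MAX_VEL * DT
    for human_joint, m in MAPPING.items():
        if human_joint not in human:
            continue  # skip joints missing from this frame
        target = m.scale * human[human_joint] + m.offset
        target = min(max(target, m.lower), m.upper)            # enforce joint limits
        prev = previous.get(m.robot_joint, target)             # first command: go to target
        step = min(max(target - prev, -max_step), max_step)    # limit change per step
        commands[m.robot_joint] = prev + step
    return commands


if __name__ == "__main__":
    prev = {"r_elbow_pitch": 1.0, "r_shoulder_pitch": 0.0}
    cmd = retarget({"right_elbow": 3.0, "right_shoulder_pitch": 0.5}, prev)
    print({k: round(v, 3) for k, v in cmd.items()})  # {'r_elbow_pitch': 1.03, 'r_shoulder_pitch': -0.03}
```

Clamping before rate limiting means an out-of-range human pose can never pull the robot past its limits, and the rate limiter smooths sudden jumps such as a sensor glitch or a recalibration.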

How to Use MOXI’s V100-R for Robot Training

- A human operator wearing the V100-R suit controls robot motion in real time, capturing precise motion data.

- During remote operation, the robot collects sensor data to deepen its understanding of movement and surroundings.

- Merging motion and sensor data enables AI-driven deep learning to refine robotic movement patterns.

- The robot autonomously learns and performs actions under diverse conditions, powered by AI insights.
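Before motion and robot sensor data can be merged for training, the two streams have to share a timeline, since the suit samples at 100 Hz while the robot's cameras and other sensors may run at different rates. A minimal sketch, assuming both streams carry timestamps in seconds from a shared clock, pairs each robot sample with the nearest MoCap frame and drops pairs that are further apart than a tolerance. The `align_nearest` name and the 5 ms tolerance are illustrative assumptions.

```python
import bisect
from typing import List, Tuple, TypeVar

A = TypeVar("A")
B = TypeVar("B")


def align_nearest(
    mocap: List[Tuple[float, A]],
    robot: List[Tuple[float, B]],
    tolerance: float = 0.005,
) -> List[Tuple[float, A, B]]:
    """Pair each robot sample with the nearest-in-time MoCap frame.

    Both lists must be sorted by timestamp (seconds, shared clock).
    Pairs more than `tolerance` seconds apart are discarded.
    """
    mocap_times = [t for t, _ in mocap]
    merged = []
    for t, sensor in robot:
        i = bisect.bisect_left(mocap_times, t)
        # Candidates: the frame just before t and the frame at or after t.
        best = None
        for j in (i - 1, i):
            if 0 <= j < len(mocap_times):
                if best is None or abs(mocap_times[j] - t) < abs(mocap_times[best] - t):
                    best = j
        if best is not None and abs(mocap_times[best] - t) <= tolerance:
            merged.append((t, mocap[best][1], sensor))
    return merged


if __name__ == "__main__":
    mocap = [(k / 100.0, f"pose{k}") for k in range(5)]        # 100 Hz suit frames
    robot = [(0.012, "img0"), (0.031, "img1"), (0.2, "img2")]   # robot sensor samples
    print(align_nearest(mocap, robot))  # [(0.012, 'pose1', 'img0'), (0.031, 'pose3', 'img1')]
```

Binary search keeps the lookup at O(log n) per robot sample; the last robot sample is dropped because no MoCap frame lies within 5 ms of it.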

Key Use Cases

Smart Manufacturing

Convert operator workflows into IMU action datasets that robots can learn from.
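A common way to turn a continuous IMU recording into learnable samples is to cut it into fixed-length, overlapping windows and attach one label to each window. The sketch below assumes the recording is a list of per-frame feature vectors at 100 Hz with one action label per frame, and labels each window by majority vote; the `make_windows` helper, the 1 s window, and the 50% overlap are illustrative choices, not V100-R defaults.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def make_windows(
    frames: Sequence[Sequence[float]],
    labels: Sequence[str],
    window: int = 100,  # 1 s at 100 Hz
    stride: int = 50,   # 50% overlap between consecutive windows
) -> List[Tuple[List[Sequence[float]], str]]:
    """Split a per-frame recording into (window_frames, majority_label) samples."""
    if len(frames) != len(labels):
        raise ValueError("frames and labels must have the same length")
    samples = []
    for start in range(0, len(frames) - window + 1, stride):
        chunk = list(frames[start:start + window])
        label = Counter(labels[start:start + window]).most_common(1)[0][0]
        samples.append((chunk, label))
    return samples


if __name__ == "__main__":
    # 3 s of dummy single-channel data: 1.6 s of "reach", then 1.4 s of "grasp".
    frames = [[float(i)] for i in range(300)]
    labels = ["reach"] * 160 + ["grasp"] * 140
    windows = make_windows(frames, labels)
    print(len(windows), [lbl for _, lbl in windows])  # 5 ['reach', 'reach', 'reach', 'grasp', 'grasp']
```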

Human-Robot Collaboration Training

Use fused nine-axis IMU data for better posture information and enhanced imitation learning.
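Sensor fusion combines complementary sensors: gyroscopes track fast motion but drift, while accelerometers give a drift-free but noisy tilt reference from gravity. Full nine-axis fusion (for example, a Madgwick or Mahony filter that also uses the magnetometer for heading) is more involved; the complementary filter below is a deliberately simplified, single-angle illustration of the idea, not the V100-R's actual fusion algorithm. It assumes the accelerometer pitch formula and the gyro y-axis share the same sign convention, and the `alpha` weight is an illustrative value.

```python
import math
from typing import Iterable, List, Tuple


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (rad) implied by the gravity vector in an accelerometer reading."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(
    samples: Iterable[Tuple[float, float, float, float]],
    dt: float = 0.01,     # 100 Hz
    alpha: float = 0.98,  # weight on the integrated gyro estimate
) -> List[float]:
    """Fuse gyro pitch rate (rad/s) with accelerometer pitch.

    Each sample is (gyro_y, ax, ay, az). The gyro term follows fast motion,
    while the small accelerometer term continuously pulls out gyro drift.
    """
    estimates = []
    pitch = None
    for gy, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if pitch is None:
            pitch = measured  # initialise from gravity
        else:
            pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * measured
        estimates.append(pitch)
    return estimates


if __name__ == "__main__":
    theta = 0.2  # sensor held still at a 0.2 rad tilt
    # Gyro reports a 0.01 rad/s bias even though the sensor is not rotating.
    sample = (0.01, -9.81 * math.sin(theta), 0.0, 9.81 * math.cos(theta))
    est = complementary_pitch([sample] * 1000)  # 10 s of data
    print(round(est[-1], 4))
```

With a constant 0.01 rad/s gyro bias, pure integration would drift about 0.1 rad over these 10 s, whereas the filter settles roughly 0.005 rad from the true tilt.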

Hazardous Environment Operations

Use motion data to drive robots through high-risk or complex tasks.

AI & XR Research

Support applications in digital twin modeling, simulation testing, and immersive interactive scenarios.

Business Strategy & Real-World Applications

| Business Aspect | Strategic Positioning | Target Industries / Applications | Industry Advantage & Value Proposition |
|---|---|---|---|
| Industry Role Positioning | "A Modular Human Motion Input Interface" → providing IMU MoCap outputs that integrate seamlessly into control and learning workflows | Humanoid robot development, AI training platforms | A universal, wearable, and cost-effective physical motion input solution with strong cross-platform integration (Jetson / ROS2 / Isaac) |
| Technology Licensing & SDK Services | Offering a ROS2 SDK that integrates with Isaac Sim / Jetson | Robotics hardware & software developers, OEM/ODM partners | Empowers third-party products to rapidly integrate IMU-based control features, creating new OEM/ODM business opportunities |
| Training Data Collaboration | Supplying high-precision human motion datasets for AI model training and biomimetic learning (e.g., imitation learning) | AI research institutes, robotics training platforms | Provides reliable, labeled human motion data, ideal for building digital twins, autonomous control models, and realistic biomimetic learning |
| Standard Solution Advancement | Delivering the "MOXI + Jetson/ROS2" standard development kit | Developer communities, industry prototyping & validation | Lowers development barriers and enables rapid prototyping and deployment, while boosting brand visibility and community adoption |